Horses bled for antivenom, crabs drained for endotoxin tests, and silkworms boiled for silk. Science can now replace these practices with synthetic alternatives — but we need to find ways to scale them.

In many ways, this horse is normal: it stands roughly 14 hands high, has dark eyes hooded by thick lashes, and makes a contented neighing sound when its coat is stroked. But its blood pulses with venom.

For weeks, this horse has been injected with the diluted venom of snakes, generating an immune response that will be exploited to produce lifesaving antivenom. A veterinarian inserts a tube into the horse’s jugular vein to extract its blood – about 1.5 percent of its body weight – every four weeks. Each bag of horse blood is worth around $500.


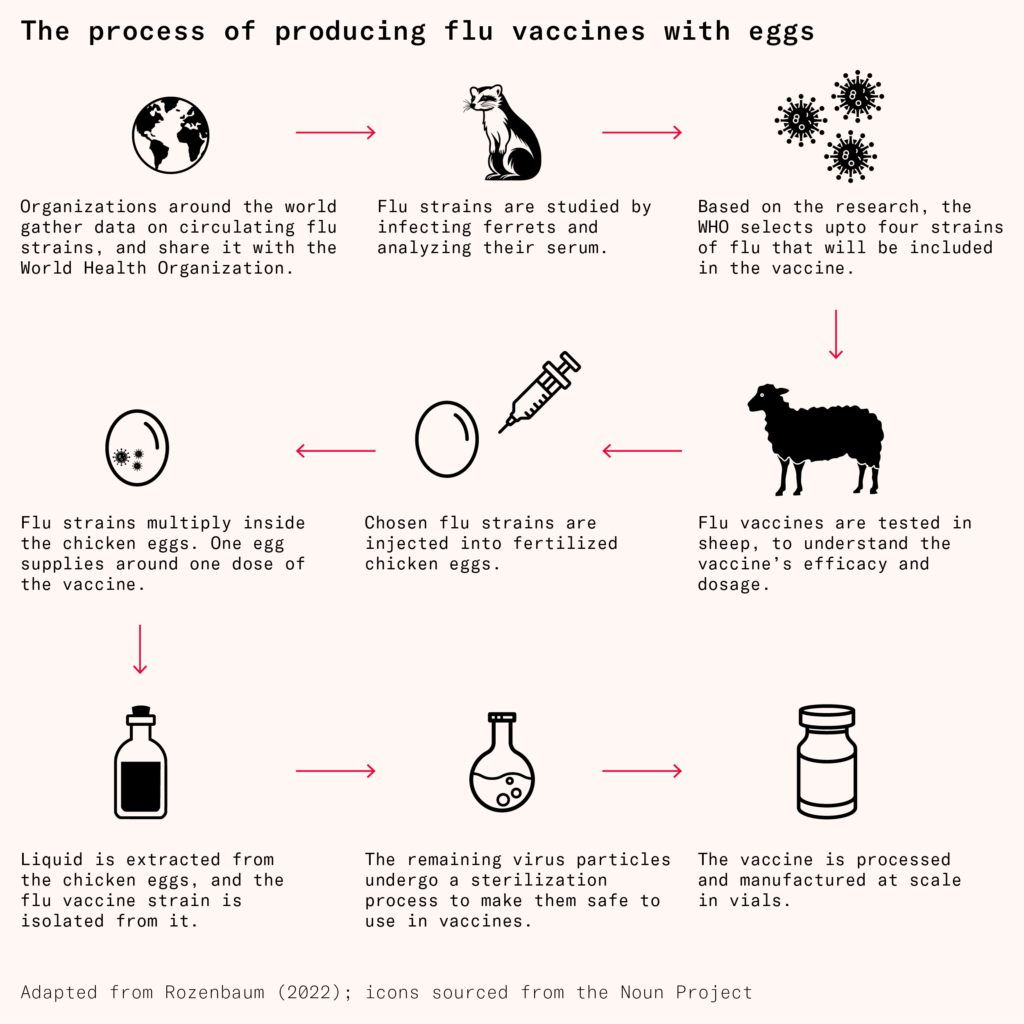

Horses are just one of the many animals we use as chemical factories: there is a veritable Noah’s ark of biopharming. Every year, over 700,000 horseshoe crabs are caught and bled. Their blood is used to test for contamination in the manufacture of medical equipment and drugs. The global vaccine industry uses an estimated 600 million chicken eggs a year to produce influenza vaccines. And we boil between 420 billion and 1 trillion silkworms every year to produce silk.

Some of these practices go back millennia. On a small coastal spit along the Mediterranean Sea, ancient Phoenicians harvested snails from which they derived a rich-hued pigment known as Tyrian purple. In a multistep process involving sun-drying and fermenting the gland that produces the color, 12,000 of these mollusks went into every single gram of dye. The complexity of its production, and the resulting rarity of the product, made the dye expensive: a pound of it cost approximately three troy pounds of gold. Tyrian purple was reserved for exclusive items such as the toga picta worn by the Roman elite.

Synthetic dyes have long since replaced animal-derived Tyrian purple. The same goes for most medicines, including insulin, which today is manufactured biosynthetically inside engineered microbes such as E. coli bacteria. But before 1978, insulin was made by harvesting and grinding up the pancreases of dead pigs and cattle from slaughterhouses. Some 24,000 pigs were needed to make just one pound of insulin, which could treat only 750 diabetics annually.
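To put the pig figures on a per-patient basis, here is a back-of-the-envelope sketch. It uses only the two numbers quoted above; the one-million-patient population in the last line is a hypothetical round number chosen for illustration, not a historical figure.

```python
# Back-of-the-envelope arithmetic from the figures quoted in the text:
# ~24,000 pig pancreases -> 1 lb of insulin -> ~750 patients treated for a year.
PIGS_PER_POUND = 24_000
PATIENTS_PER_POUND_PER_YEAR = 750


def pigs_per_patient_year(pigs_per_pound: float = PIGS_PER_POUND,
                          patients_per_pound: float = PATIENTS_PER_POUND_PER_YEAR) -> float:
    """Number of pigs needed to supply one patient with insulin for one year."""
    return pigs_per_pound / patients_per_pound


def pigs_needed(patients: int) -> float:
    """Pigs per year needed to supply `patients` people (population size is hypothetical)."""
    return patients * pigs_per_patient_year()


print(pigs_per_patient_year())   # 32.0
print(pigs_needed(1_000_000))    # hypothetical 1 million patients -> 32000000.0
```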

Biotechnology reduces not only the inefficiencies of collecting molecules from animals but also the harms associated with working with them in the first place. Although little is known about the welfare of the horses used in antivenom production, one study found that horses used in the manufacture of brown spider antivenom suffered from blood clots and painful lumps under their skin. Diseases also affect silkworms, killing between 10 and 47 percent of larvae, depending on the country in which they are reared.

Advancements in recombinant DNA, cloning, and biomanufacturing have reduced our reliance on animals to serve as chemical factories while leading to more precise and efficient antibodies and antivenoms. We have now reached the point where just about any molecule that has historically been made from animals can be made synthetically from engineered cells. However, just because it is technically feasible to move completely away from biopharming does not mean that it will be easy. And while there has been progress in eschewing animal-derived products in some areas, such as with insulin, others, like synthetic antivenom or vaccine production, have been less straightforward. Solutions to these require not only mimicking what animal biology does naturally but doing so at scale.

Moving away from animals

In ancient times, the Phoenicians – and the Greeks and Romans after them – made Tyrian purple dye in the world’s first chemical industry. They began by carefully extracting hypobranchial glands (which produce various compounds, including colorful pigments) from the inner roof of the snails’ shells and letting them ferment in an airtight container. From here, they would either purify the mixture and dry it for use as a pigment or apply the fermented glands directly to fabric. In this latter process, it was crucial to monitor the pH; otherwise, the cloth risked felting, or clumping together, in overly alkaline solutions. Careful management of exposure to sun and air was similarly essential, so that the mixture could oxidize enough to develop the desired purple shade, but not so much that it turned blue.

At the height of production, so many snails were killed to make dye that a heap of discarded shells in the ancient city of Sidon was said to have ‘created a mountain 40 meters high’. But when Constantinople fell to the Ottoman Empire in 1453, the knowledge required to make Tyrian purple vanished for the next 550 years.

Two popular theories explain how the knowledge was lost. The first suggests that when the Byzantine Empire fell, the Catholic Church – whose cardinals wore garments dyed with Tyrian purple – lost access to its large dye factories and was forced to switch to cheaper red garments, thus diminishing demand for the dye.

Another theory suggests that the Romans overharvested Tyrian snails, diminishing their populations to the point of collapse shortly before the Ottomans arrived. Regardless of which explanation is true, the result was the same: knowledge surrounding the production of Tyrian purple lapsed and the method fell out of fashion.

More often, it is not lost knowledge that precipitates a move away from biopharming, but instead the discovery of a more efficient alternative.

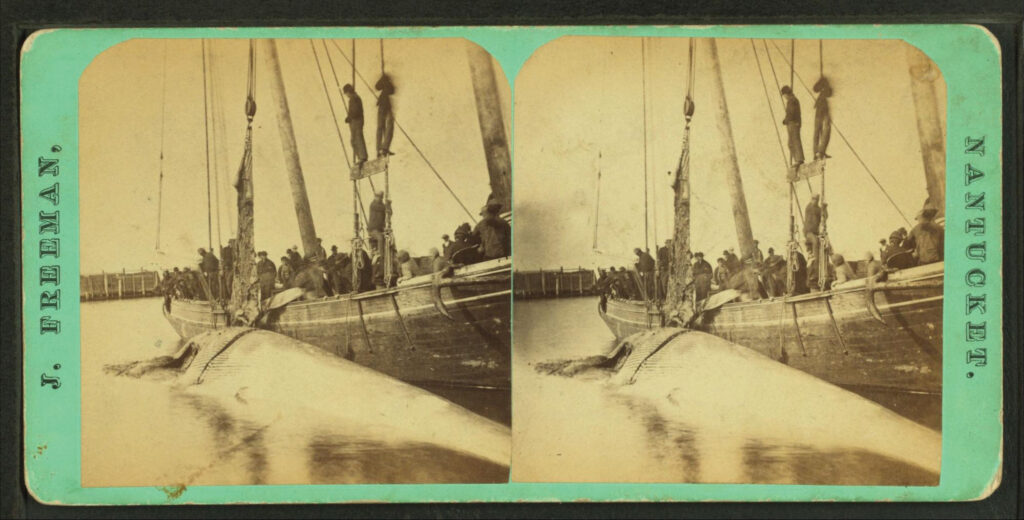

In the 1830s, the United States was home to the world’s largest whaling industry, centered around Cape Cod in Massachusetts. More than 10,000 sailors took to the Atlantic each season, predominantly hunting right whales and humpbacks that stayed closer to shore.

After humpbacks and right whales had been hunted to scarcity, many sailors went on yearslong voyages in pursuit of the more elusive sperm whale. A 15-meter-long sperm whale contains about three metric tons of oil in its spermaceti organ, a large chamber in the head above the upper jaw. Whale oil was highly prized because it could be used to make candles that burned brightly and without the odor of burning lard.

In the 1850s, Scottish chemist James Young figured out how to make paraffin wax from coal and oil shales at commercial scale, shrinking the market for spermaceti. This was just as well, because at the industry’s peak in the mid-nineteenth century, whalers killed over 5,000 sperm whales a year. There were an estimated two million sperm whales in 1712, before the start of commercial whaling. Today, there are about 850,000, a decline of approximately 57 percent in 310 years. It’s estimated that by 2001, 12 years after whaling had ended in nearly every country, there were around 99 percent fewer blue whales, and 89 percent fewer right whales and bowhead whales, than in 1890. Populations have barely recovered since.
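The 57 percent figure follows directly from the two population estimates. The sketch below checks it and also converts the decline into a constant yearly rate; that annualized rate is our own simplifying summary, since in reality the killing was concentrated in the whaling era rather than spread evenly over 310 years.

```python
def percent_decline(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`."""
    return 100 * (before - after) / before


def annual_rate_percent(before: float, after: float, years: float) -> float:
    """Constant yearly percentage change that turns `before` into `after` over `years`."""
    return 100 * ((after / before) ** (1 / years) - 1)


# Sperm whale estimates quoted in the text: ~2 million (1712) vs ~850,000 (310 years later).
print(round(percent_decline(2_000_000, 850_000), 1))           # 57.5
print(round(annual_rate_percent(2_000_000, 850_000, 310), 2))  # -0.28
```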

This same trend, wherein animal-based manufacturing gives way to more humane and efficient alternatives, has also played out in circumstances where we could still readily use animals, but choose not to.

Consider the steep rise and precipitous fall of pigs used for insulin production.

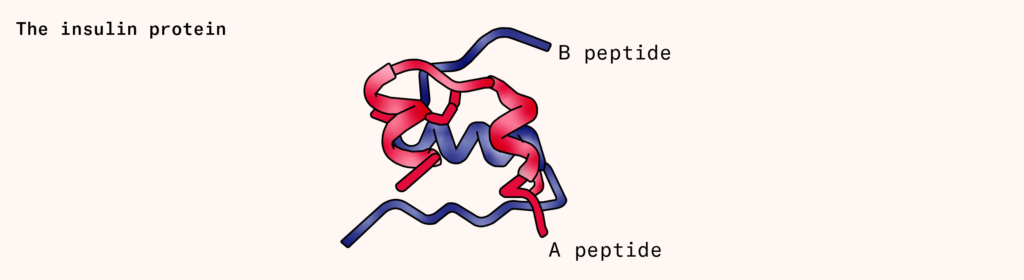

Insulin is a small protein, produced by the pancreas, that signals cells to take in sugar from the surrounding blood. (Strictly speaking, it consists of two short chains, called A and B, linked by disulfide bonds.) People with diabetes either don’t produce enough insulin (type one) or have cells that don’t respond to insulin appropriately (type two). Either way, sugar builds up in the bloodstream, slowly damaging the eyes, kidneys, and nervous system.

In 1922, Dr. Frederick Banting and his student, Charles Best, discovered that the pancreas contains a compound, later identified as insulin, that could reduce blood sugar levels in humans. In their original paper, the men describe how they gave dogs a lethal dose of chloroform before swiftly excising and macerating the ‘degenerated pancreas’, then filtering the substance through paper and injecting it into the veins of a 14-year-old boy. Crude as the method was, they wrote that ‘Fortune favored us in the first experiment’. The extract successfully reduced the amount of sugar excreted in the boy’s urine.

That same year, the Eli Lilly company in Indianapolis struck an agreement with Banting and Best to mass-produce insulin by harvesting animal pancreases. By 1923, the company was selling Iletin, the first American insulin product to treat diabetes. Eli Lilly quickly came to dominate the US market, with $160 million in annual revenue by 1976.

Around the same time, Eli Lilly executives became concerned when a graph began to circulate around the company. It showed two lines: one tracing the available supply of pig and cow pancreases, and another tracking the rate of diabetes prevalence in the USA, which was then rising by about five percent each year as more people were being diagnosed and surviving for longer. Its implications for the insulin market were alarming: Eli Lilly would soon fall short of demand.
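The graph’s logic is compound growth racing a supply that cannot keep pace. A minimal sketch, assuming (our simplification, not the graph’s actual data) that pancreas supply stays flat while demand compounds at the five percent a year quoted above; the starting ratios in the usage lines are hypothetical.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for a quantity growing at a fixed annual rate to double."""
    return math.log(2) / math.log(1 + annual_growth)


def years_until_shortfall(demand: float, supply: float, annual_growth: float) -> int:
    """Years until demand, compounding at `annual_growth`, first exceeds a fixed supply."""
    if demand > supply:
        return 0
    if annual_growth <= 0:
        raise ValueError("demand never overtakes a fixed supply without positive growth")
    years = 0
    while demand <= supply:
        demand *= 1 + annual_growth
        years += 1
    return years


print(round(doubling_time_years(0.05), 1))   # 14.2
# Hypothetical: demand starts at 80% of a supply that never grows.
print(years_until_shortfall(80, 100, 0.05))  # 5
# Hypothetical: supply starts at twice current demand.
print(years_until_shortfall(50, 100, 0.05))  # 15
```

At five percent a year, demand doubles roughly every 14 years, so even a supply twice as large as current demand would be overtaken in about 15 years under these assumptions.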

To circumvent this shortfall, the company organized an insulin symposium, inviting the brightest minds from the pharmaceutical and molecular biology fields to discuss whether genetic engineering could be used to make insulin. What if it were possible to isolate the human insulin gene and insert it into living bacteria? Could the microbes be induced to manufacture the molecule? And would such a molecule be chemically and functionally equivalent to the human version? By 1978, a scientist named David Goeddel and his colleagues at Genentech, a biotech startup in San Francisco, had answered all three questions. They used chemistry to build synthetic genes for insulin’s A and B chains, one nucleotide at a time, and inserted them into Escherichia coli cells. The engineered microbes began making the two chains of human insulin.

Not only had the Genentech scientists created human insulin in microbes, but they had also made a product more reliable than the extracts derived from animals, whose potency varied by up to 25 percent from lot to lot. And whereas some diabetics developed allergic skin reactions to animal insulin, reactions to synthetic insulin were rare.

The understanding of biology that crystallized in this period was this: all animals are made of cells that encode their genetic material in the form of DNA. If scientists can identify the genetic sequences responsible for a particular molecule, be it an antivenom, an antibody, or a purple dye, then those sequences can be spliced into cells to produce molecules in the laboratory.

Making molecules

As improbable as it may seem, all life on Earth stems from the same organic molecules. Some of these molecules, known as nucleotides or bases, but perhaps more familiar as A, T, C, and G, act as the building blocks of genetic information. The DNA belonging to a puffer fish is chemically identical to the DNA of a tree. The order of their bases differs, of course, but the DNA molecules found in one organism are often interpretable by other life-forms. A gene encoding insulin in a person can be cloned, inserted into microbes, and quickly propagated in the laboratory. Microbes reproduce quickly – under ideal conditions, E. coli cells split in two every 20 minutes – and so can make vast quantities of biological products on short timescales.

The first step in making an animal product in the laboratory is to identify the genes responsible. But this is easier said than done. Genes are not merely strings of DNA that encode proteins, as students are often taught in school. Some genes, for example, make RNA molecules that are never translated into proteins at all; instead, many of these help control the expression of other genes in the genome.

It used to take months or years for scientists to figure out which particular strings of DNA were responsible for making a particular protein in cells. Although DNA’s double-helix structure was resolved in 1953, the first gene to be fully sequenced, which encodes the coat protein (or outer shell) of a bacteriophage, was not decoded until 1972.

Techniques to sequence DNA slowly percolated through academic circles in the late 1970s. The first commercial, automated DNA sequencer was released in 1987. In 2001, it cost about $100 million to sequence three billion bases of DNA in the human genome. Twenty years later, the same feat cost about $700.
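To see how steep that decline is, the sketch below computes the fold reduction and the implied average halving time. It assumes a steady exponential fall in price, which is a simplification of ours: real sequencing costs dropped unevenly.

```python
import math


def fold_reduction(old_cost: float, new_cost: float) -> float:
    """How many times cheaper the new cost is."""
    return old_cost / new_cost


def halving_time_years(old_cost: float, new_cost: float, years: float) -> float:
    """Average time for the cost to halve, assuming a steady exponential decline."""
    return years * math.log(2) / math.log(old_cost / new_cost)


# Human-genome figures quoted in the text: ~$100 million (2001) vs ~$700 (20 years later).
print(round(fold_reduction(100e6, 700)))             # 142857
print(round(halving_time_years(100e6, 700, 20), 2))  # 1.17
```

The two quoted prices imply that the cost of sequencing a human genome halved roughly every 14 months on average.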

A gene, however, is little more than a string of bases: As, Ts, Cs, and Gs. And a DNA sequence alone is not always sufficient to infer the function of the protein it encodes. Just by looking at the insulin gene’s sequence, for example, one would not necessarily know that it encodes a protein that lowers blood sugar levels. That function has to be established experimentally.

Emerging AI tools, such as AlphaFold 3, can predict a protein’s structure from its amino acid sequence, which can be inferred from the gene’s DNA, but a protein’s structure doesn’t always reveal its function. The tried-and-true way to figure out a gene’s function is to delete it from the genome and then watch what happens to the organism. If you delete the insulin-coding gene, blood sugar levels spike.

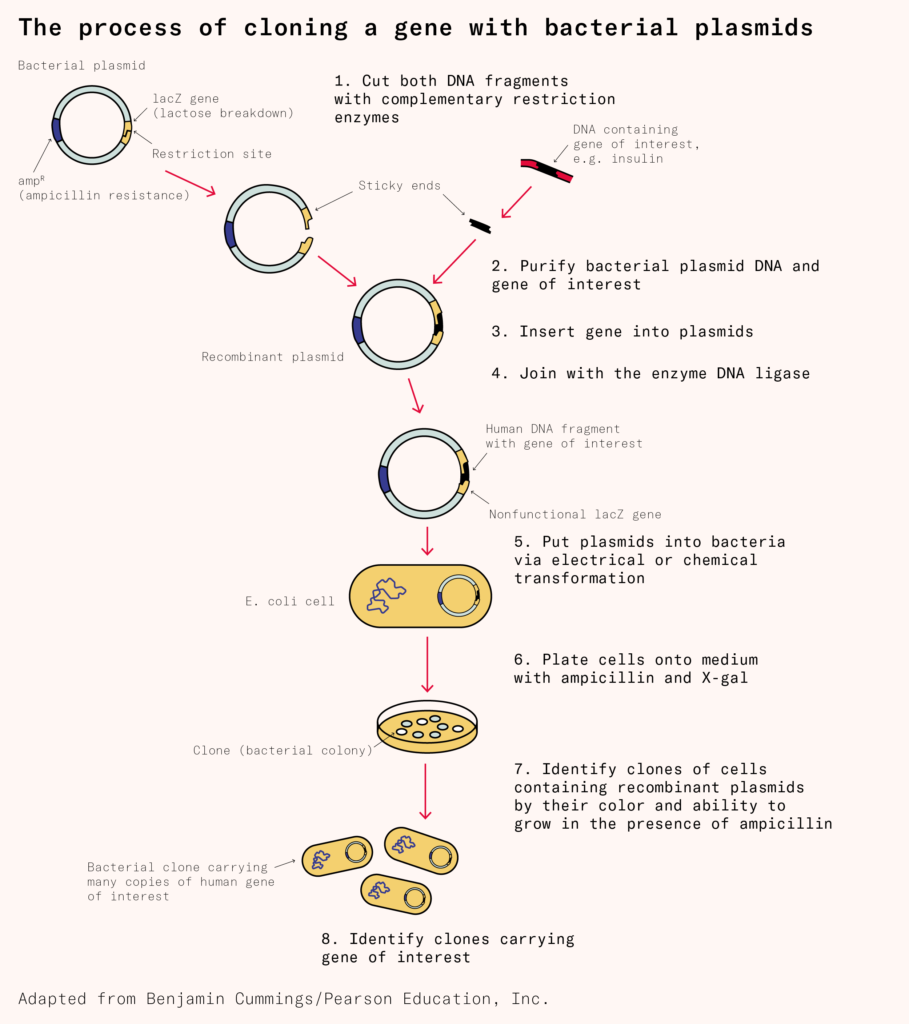

Once scientists determine the gene responsible for a particular protein, such as an antibody or insulin, that gene can be cloned and inserted into quickly dividing cells in the laboratory. The basic technology to do this has existed for more than 50 years and involves three overarching steps. First, scientists make DNA that encodes a protein of interest, such as insulin, an antibody, or an antivenom. Second, they insert that DNA into living cells. Molecular machines inside the cells ‘read’ the DNA and transcribe it into messenger RNA, which ribosomes – large enzymes made from proteins and RNA – then use to assemble proteins. Third, scientists kill the cells and isolate the proteins.
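The ‘read the DNA, make RNA, build protein’ step can be sketched in a few lines. This is a toy model of ours: it takes a gene’s coding strand, swaps T for U to mimic transcription, and translates three-letter codons into amino acids using the standard genetic code until it reaches a stop codon. It ignores introns, regulation, and protein folding, and the example sequence is made up; it is not the insulin gene.

```python
from itertools import product

# Standard genetic code, codons ordered U, C, A, G at each position ("*" = stop).
BASES = "UCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}


def transcribe(coding_dna: str) -> str:
    """Messenger RNA carries the coding strand's sequence with U in place of T."""
    return coding_dna.upper().replace("T", "U")


def translate(mrna: str) -> str:
    """Read codons from the first AUG start codon until a stop codon (one-letter amino acids)."""
    start = mrna.find("AUG")
    if start == -1:
        return ""
    protein = []
    for i in range(start, len(mrna) - 2, 3):
        amino_acid = CODON_TABLE[mrna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


toy_gene = "ATGTTTGTGAACTAA"  # hypothetical sequence: start (Met), Phe, Val, Asn, stop
print(translate(transcribe(toy_gene)))  # MFVN
```

In a real cell, ribosomes do the work of `translate`, and the resulting chain of amino acids must still fold into its working shape.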

The first step in this process was cracked in 1972, when a graduate student at Stanford University named Janet Mertz isolated DNA strands from two distinct organisms and then cut them with a restriction enzyme, a type of protein that slices DNA molecules in precise locations. Restriction enzymes leave behind sticky ends, or short overhanging sequences, that can be joined together to create a chimeric strand of genetic material. Mertz’s experiment suggested, for the first time, that DNA from a horse, penguin, or jellyfish could, in principle, be cut and pasted into a bacterial genome.
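The cut-and-paste logic of sticky ends can be modelled with simple string operations. A simplified sketch, with its assumptions labelled: the text does not name the enzyme Mertz used, so we use EcoRI, which recognizes GAATTC and cuts each strand between the G and the first A, purely as an illustration. The model tracks only the top strand, and the two ‘organism’ sequences are made up.

```python
# EcoRI (illustrative choice) recognizes GAATTC and cuts each strand as G^AATTC.
SITE = "GAATTC"
CUT_OFFSET = 1
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Sequence of the opposite strand, read 5' to 3'."""
    return seq.translate(COMPLEMENT)[::-1]


def find_sites(seq: str, site: str = SITE) -> list[int]:
    """Start positions of every recognition site on the top strand."""
    positions, i = [], seq.find(site)
    while i != -1:
        positions.append(i)
        i = seq.find(site, i + 1)
    return positions


def digest(seq: str, site: str = SITE, cut_offset: int = CUT_OFFSET) -> list[str]:
    """Top-strand fragments left after cutting at every site."""
    fragments, previous = [], 0
    for pos in find_sites(seq, site):
        fragments.append(seq[previous:pos + cut_offset])
        previous = pos + cut_offset
    fragments.append(seq[previous:])
    return fragments


def sticky_end(site: str = SITE, cut_offset: int = CUT_OFFSET) -> str:
    """Single-stranded overhang left by a staggered cut in a palindromic site."""
    return site[cut_offset:len(site) - cut_offset]


overhang = sticky_end()
print(overhang, reverse_complement(overhang) == overhang)  # AATT True

# Hypothetical DNA from two different organisms, each with one EcoRI site.
left, _ = digest("CCCGAATTCAAA")
_, right = digest("GGGGAATTCTTT")
print(left + right)  # CCCGAATTCTTT (a chimera that regenerates the site)
```

Because the AATT overhang is its own reverse complement, any two ends cut by the same enzyme can pair, which is why fragments from unrelated organisms can be joined.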

The following year, two groups at Stanford and the University of California, San Francisco, repeated Mertz’s experiment, but took things a step further by inserting the chimeric DNA into E. coli cells. The engineered microbes successfully copied and propagated the chimeric DNA.

At the time of Mertz’s experiments, the only way to get a particular DNA sequence was to obtain physical access to the relevant organism, be it a horse, a whale, or something else entirely, and then use restriction enzymes to cut the desired gene out of frozen tissue.

Today, companies such as Twist Bioscience can synthesize DNA for about $0.07 per base. The average human gene has a protein-coding sequence stretching a bit more than 1,000 bases in length, so it costs a bit less than $100 to chemically synthesize an average-sized human gene. It is no longer necessary to obtain physical samples of genetic information. One can simply search for a gene in a public database, download the sequence, and then order it from a DNA synthesis company.
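The cost estimate above is a multiplication; the sketch below makes it explicit. It assumes a flat per-base price, which is a simplification of ours (real quotes vary with length, sequence complexity, and minimum charges), and the 1,300-base gene is a hypothetical stand-in for ‘a bit more than 1,000 bases’. Prices are kept in integer cents to avoid floating-point rounding.

```python
PRICE_CENTS_PER_BASE = 7  # ~$0.07 per base, the figure quoted in the text


def synthesis_cost_dollars(length_bases: int, cents_per_base: int = PRICE_CENTS_PER_BASE) -> float:
    """Estimated price of synthesizing a DNA sequence at a flat per-base rate."""
    return length_bases * cents_per_base / 100


def max_length_for_budget(budget_dollars: int, cents_per_base: int = PRICE_CENTS_PER_BASE) -> int:
    """Longest sequence affordable at a flat per-base rate."""
    return budget_dollars * 100 // cents_per_base


print(synthesis_cost_dollars(1_000))  # 70.0
print(synthesis_cost_dollars(1_300))  # 91.0 (hypothetical gene length)
print(max_length_for_budget(100))     # 1428
```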

Once scientists obtain a DNA sequence, either the old way, by cutting it from animal tissue, or the newer way, by synthesizing it chemically, they splice it into a loop of DNA, called a plasmid, which they then insert into living cells. This insertion is most commonly done using either chemicals or electricity.

The chemical approach, invented in 1970, uses calcium ions to coat negatively charged DNA strands and push those strands past a microbial cell’s membrane. The electrical approach, called electroporation, was first described in 1982 and works by zapping cells with electrical pulses. Each zap opens temporary pores in the membrane, through which DNA can pass.

Once DNA encoding, say, a human insulin gene is inside a cell, the cell will begin to read the genetic information and churn out human insulin. The cells also divide, copy the loops of DNA, and pass the insulin genes on to their progeny. Within about half a day under ideal conditions, one transformed cell can become billions of clones, each carrying the engineered DNA and making lots of human insulin. The same basic technology can be used to make antibodies and other proteins, too.
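How quickly does one cell become billions? The sketch below assumes unchecked doubling every 20 minutes, the ideal-conditions figure for E. coli given earlier; real cultures have a lag phase and stop growing once nutrients run out, so treat these numbers as a best case.

```python
import math

DOUBLING_MINUTES = 20  # E. coli doubling time under ideal conditions, per the text


def cells_after(hours: float, start_cells: int = 1, doubling_minutes: float = DOUBLING_MINUTES) -> int:
    """Cells after `hours` of unchecked doubling (counts completed doublings only)."""
    doublings = int(hours * 60 // doubling_minutes)
    return start_cells * 2 ** doublings


def hours_to_reach(target_cells: float, start_cells: int = 1,
                   doubling_minutes: float = DOUBLING_MINUTES) -> float:
    """Hours of unchecked doubling needed to grow from `start_cells` to at least `target_cells`."""
    doublings = math.ceil(math.log2(target_cells / start_cells))
    return doublings * doubling_minutes / 60


print(cells_after(10))      # 1073741824
print(hours_to_reach(1e9))  # 10.0
```

Thirty doublings, or ten hours at this pace, already turn a single cell into more than a billion.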

Not all cells are well-suited to making all molecules, though. When Genentech made human insulin, it used E. coli bacteria. But today, many drugs are made using mammalian cells instead.

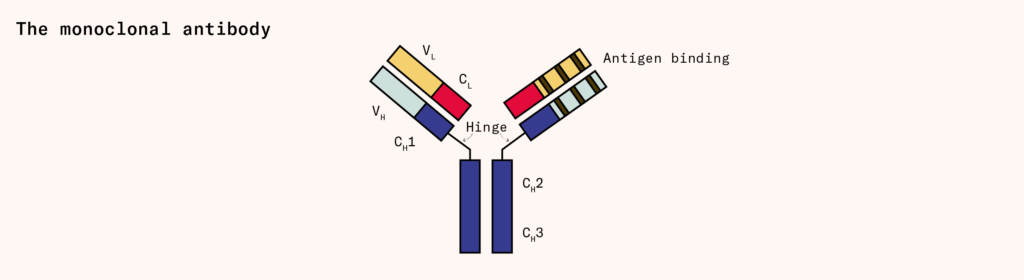

Monoclonal antibodies, for example, are Y-shaped proteins that tightly bind to specific molecules. Each antibody is built from two kinds of protein chain, called heavy chains and light chains (two copies of each), which interlock to form the complete antibody.

Many of the most widely prescribed pharmaceutical drugs, including the immunosuppressant adalimumab (sold as Humira, and generating over $21 billion in sales in 2021) and cancer therapy pembrolizumab (sold as Keytruda, generating over $17 billion in the same year) are monoclonal antibodies. But only a few kinds of cells, mainly mammalian cells and yeasts, can make them, because antibodies are glycosylated, or tagged with sugar molecules, and bacteria such as E. coli cannot naturally perform this reaction.

Most monoclonal antibodies are made in Chinese hamster ovary, or CHO, cells, which descend from ovary cells taken from a Chinese hamster and later immortalized. Robert Briggs Watson, a Rockefeller Foundation field staff member based in China, smuggled Chinese hamsters out of the country on one of the last Pan Am flights from Shanghai, just before Mao Zedong and the Communists took over. Today, CHO cells make about 70 percent of all therapeutic proteins sold on the market.

Once CHO cells are transformed with genes encoding the heavy and light chains of a particular antibody, they begin to express the genes and make the proteins. One liter of CHO cell culture can generally produce about four grams of antibody, but this number varies widely, from mere milligrams to more than ten grams per liter, depending on the specific antibody and culture conditions.

The final step in biomanufacturing is scaling up. Cells that generate the most antibodies in small volumes are transferred into large steel bioreactors, which typically hold up to 10,000 liters. Each bioreactor has spinning blades that agitate the cells to keep them well mixed and oxygenated. After several days, once the cells have secreted antibodies to a high concentration, scientists remove the cells and purify the antibodies.
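Combining the typical titer (grams of antibody per liter of culture) with the reactor size gives a rough output per batch. In this sketch the `recovery` parameter, the fraction of antibody that survives purification, is a hypothetical knob of ours; it defaults to 1.0, meaning no losses, which overstates real yields.

```python
def antibody_per_batch_kg(titer_g_per_l: float, volume_l: float, recovery: float = 1.0) -> float:
    """Kilograms of antibody from one bioreactor run.

    titer_g_per_l: grams of antibody per liter of culture
    volume_l: working volume of the bioreactor in liters
    recovery: fraction surviving purification (hypothetical; 1.0 means no losses)
    """
    return titer_g_per_l * volume_l * recovery / 1000


# Typical titer and reactor size quoted in the text: ~4 g/L in a 10,000-liter tank.
print(antibody_per_batch_kg(4, 10_000))   # 40.0
# Upper end of the quoted titer range: ~10 g/L.
print(antibody_per_batch_kg(10, 10_000))  # 100.0
```

At the typical titer, a single 10,000-liter run yields on the order of tens of kilograms of antibody before purification losses.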

Reasons for animals

If most animal products today can be made by means of biotechnology, then why do we still use animal products to make flu vaccines, tests for microbial contamination in drugs, and many antibodies? Three prevailing reasons come to mind, which we’ll call regulatory lock-in, molecular complexity, and ease of scaling.

Horseshoe crab blood is an example of regulatory lock-in: although we have known how to make synthetic alternatives for decades, regulators have only recently approved them. Every year, biomedical companies along the eastern coast of the United States still catch hundreds of thousands of horseshoe crabs and drain some of their blood for use in endotoxin tests.

Horseshoe crabs’ characteristic blue blood contains immune cells called amebocytes. An extract of these cells, known as LAL, or limulus amebocyte lysate, forms a gel-like clump when exposed to bacterial molecules called endotoxins. Endotoxins are large molecules made of sugars and fats that stud the outer membrane of some bacteria and, if introduced into a patient, can cause septic shock. LAL allows scientists to detect the presence of these endotoxins in medicines and vaccines, and filter them out.

Synthetic versions of LAL have been made using recombinant DNA technology since the 1990s. And in 2020, two important shifts happened: Eli Lilly tested all of its Covid-19 antibody medicines using a synthetic alternative to horseshoe crab blood, and the European Pharmacopoeia, which sets the official quality standards for medicines in Europe, approved the use of synthetic alternatives. Academic reviews comparing synthetic reagents with those derived from horseshoe crab blood confirm that ‘the recombinant technologies are comparable in protecting patient safety’.

Despite this, the US has been slow to adopt synthetic alternatives. It was only in late July of this year that the US Pharmacopeia finally approved language to permit ‘the use of non-animal-derived reagents for endotoxin testing’. How quickly this shift will take place is anyone’s guess, but considering how dependent we have become on animal-derived LAL, it would be naive to expect the switch to be rapid.

In the case of molecular complexity, consider antivenoms. The method for making antivenom has changed little over the last 100 years. Venom is first milked from snakes and injected into horses or sheep, which produce many different antibodies that bind to the venom. These are known as polyclonal antibodies. Blood is collected from these animals, the antibodies (or fragments of them) are purified from the plasma, and they are then given to people bitten by venomous snakes.

There are a few problems with this approach. In particular, polyclonal antibodies are difficult to make synthetically because they are mixtures of many different molecules, and choosing the correct antivenom often requires identifying the precise snake species responsible for the bite. It would be far better to create synthetic antivenoms that could neutralize many different types of venom, which we call multiplex antivenoms.

We have made some progress toward these multiplex antivenoms, but commercially available versions are likely many years away. A recent study, published in Science Translational Medicine in February, reported the discovery of a human monoclonal antibody that, in cell-based tests, could neutralize long-chain three-finger neurotoxins, a family of toxins found in the venoms of many different snakes. Injected into mice, the antibody protected the animals against otherwise lethal doses of venom. And because it is a single monoclonal antibody rather than a mixture of antibodies, it can be readily manufactured using recombinant DNA technology and the Chinese hamster ovary cells we rely on in so many other circumstances. The antibody still needs to be tested in human trials, but it’s an important step toward building broadly neutralizing antivenoms at scale.

This brings us to the issue of scale. Animals are still used to make medicines and vaccines because that is sometimes still the simplest and cheapest approach. This is evident in how we produce seasonal flu vaccines.

In 1931, two scientists at Vanderbilt University, Ernest Goodpasture and Alice Woodruff, discovered that fertilized chicken eggs injected with a small amount of virus were ideal vessels for enabling those viruses to multiply and propagate, and by the 1940s vaccinologists were using eggs to make influenza vaccines. After a few days of incubation, during which viruses build up in the egg, a small hole is punched through its shell, and the fluid within is removed. From here, viral particles are carefully purified and inactivated, after which they can be mixed in cocktails and injected into humans.

A single egg can produce several milligrams of vaccine, and while it might seem that a large bioreactor could make more, there are only about 61 million liters of global biomanufacturing capacity, of which only 10 million liters are unreserved. Moreover, reactors can only be made so large before the cells inside die from a lack of oxygen. By comparison, eggs are cheap and easy to come by: in 2023, the US vaccine industry used less than one percent of the 110 billion eggs the country produced that year.

Of the roughly 175 million flu vaccine doses administered in the US in 2020, about 82 percent were manufactured using eggs, according to the CDC. Still, this approach has several drawbacks. First, the viruses can mutate as they propagate in eggs, leading to vaccines with low efficacy. A 2017 paper, for example, found that the H3N2 strain used for the 2016–2017 flu vaccine carried a mutation that caused the antibodies it elicited to bind poorly to the H3N2 strains circulating in the human population.
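The egg and dose figures can be combined into a rough ceiling on how many eggs each egg-based dose could consume. This sketch makes two simplifying assumptions of ours: it pairs the 2020 dose count with 2023 egg output, and it treats the entire ‘less than one percent’ of eggs as going to flu vaccines. It is a sanity check on scale, not an estimate of actual egg use per dose.

```python
def egg_based_doses(total_doses: int, egg_share_percent: int) -> int:
    """Doses made in eggs, given the total and the egg-based share in whole percent."""
    return total_doses * egg_share_percent // 100


def max_eggs_per_dose(eggs_available: float, doses: int) -> float:
    """Upper bound on eggs per dose if every available egg went to these doses."""
    return eggs_available / doses


doses = egg_based_doses(175_000_000, 82)  # US, 2020 (CDC figures quoted in the text)
egg_ceiling = 110_000_000_000 // 100      # "less than one percent" of ~110 billion eggs (2023)
print(doses)                                            # 143500000
print(round(max_eggs_per_dose(egg_ceiling, doses), 1))  # 7.7
```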

In 2021, researchers also found that people injected with influenza vaccines were developing antibodies against part of the egg rather than against the virus grown in it, which could make it more difficult for a person’s antibodies to recognize and respond to actual flu viruses.

For these reasons, influenza vaccines made in eggs can be significantly less effective than cell-based vaccines. However, until we can find a way to produce 175 million doses per year in bioreactor tanks, moving away from eggs will remain challenging.

Were we to overcome the difficulties of regulatory lock-in, molecular complexity, and scale, biopharming could readily give way to synthetic alternatives. This would be a welcome shift not only for animal welfare but also for efficacy: synthetic versions are chemically identical to their natural analogs, or even superior to them.

Animal-derived insulin was never particularly pure. Even after Eli Lilly chemists invented a method, called isoelectric precipitation, that increased the purity of insulin extracted from cows and pigs by between 10- and 100-fold, analyses showed that the purified samples still contained various unwanted peptides and contaminants. Synthetic insulin is much less likely than animal insulin to trigger serious immune responses and reactions at the injection site.

A similar story has played out for seasonal flu vaccines. Research comparing egg- and cell-based vaccines, administered to more than 100,000 people over three flu seasons, found that the cell-made vaccines were consistently more effective.

The shift to synthetic alternatives, then, will be a move toward molecules with the potential to be both more potent and reliable than their ‘natural’ counterparts.

Even as biotechnology transforms how we derive molecules, however, we’d do well to acknowledge that the field was formed by, and continues to be built on the back of, the exploitation of biological diversity.

CHO cells, used to make antibodies, descend from Chinese hamsters smuggled out of that nation in the 1940s. Modern polymerase chain reaction, a linchpin technology used in Covid-19 diagnostics, was only possible because of enzymes discovered in heat-tolerant microbes living in Yellowstone National Park. And the first protein structure was solved using myoglobin, an oxygen-storing muscle protein, purified from sperm whale muscle stored in a freezer at Cambridge University.

In recent decades, sequencing technologies have made it easier and cheaper than ever to decode genomes and upload the sequences to databases. It is no longer necessary for scientists to collect DNA from animal tissues directly since they can now download the sequences online and order them from DNA synthesis companies.

One interpretation of this might lead us to become less concerned about the future abundance of the animals we exploit for biomanufacturing. Our ability to synthesize compounds means we no longer need fresh animal tissues to extract information about their DNA, or 12,000 snails to make a gram of brilliant purple dye. However, as habitats vanish and animals go extinct, biotechnology’s potential to discover and exploit new tools and new genome sequences will also diminish. So while we can revel in the discoveries we have already made and in the trends that show a move away from biopharming, we should remain vigilant about protecting wild nature.

After all, CRISPR, part of a microbial defense system that was first adapted into a gene-editing tool in 2012, was studied early on by Francisco Mojica, a Spanish researcher, while he was investigating an obscure species of archaea called Haloferax mediterranei. When humans destroy ecosystems, they also destroy genetic information that could yield future breakthroughs. Biotechnology is not independent of the natural world; it’s enmeshed within it.